Friends & family. Puppies. Pittsburghese. Sunday mornings. Rainy summer evenings. Hugs. Books. Driving with the windows down. The Beatles. Trail runs. Pumpkin patches. The brain.

Lots of things make me happy – but few things feel as wonderful as showing appreciation for the kindness, love, or courtesy extended by another person.

My Buddha Doodles Gratitude Journal by Molly Hahn (Mollycules)! I love the art in this journal and there is plenty of space provided to write down positive experiences from each day.

Gratitude, from the Latin word gratus, meaning ‘thankful’, is a powerful moral sentiment that psychologists have shown significantly influences overall happiness. The simple act of being thankful brings many mental, social, and physical benefits in tow.

Earlier this year I came across the SoulPancake video titled “The Science of Happiness – An Experiment in Gratitude”. The video explored a result from a 2005 study that tested how well several positive psychology interventions boosted happiness, and how long those boosts lasted over the following months. These interventions included exercises in gratitude and in building positive self-awareness.

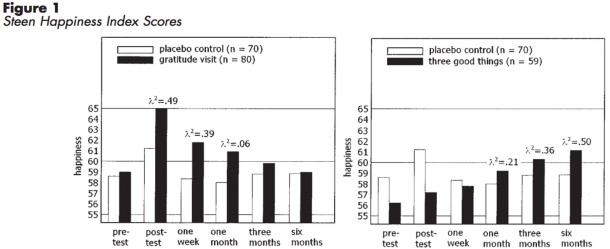

Part of the original 2005 study directed participants to complete a questionnaire that established their general level of happiness. Following the survey, they were instructed to write and deliver a letter of gratitude to someone who had been especially kind toward them but had not been properly thanked.

One week after delivering the letter, participants reported being happier and less depressed. This exercise in reflecting on and expressing gratitude produced the largest happiness boost of all the activities assigned in the study. In other words, telling someone how much you appreciate them gives you the most happiness bang for your buck.

These positive vibes lasted through the one-month follow-up but had faded by the three-month follow-up, so practice gratitude often!

A separate group of participants was asked to journal about three good things that happened each day. This group reported being happier than before the study, and they stayed happier at the three- and six-month follow-ups. Additionally, the happiest participants were those who kept journaling about their good experiences frequently.

Hence, my 2014 New Year’s resolution is keeping a gratitude journal. Bring on the gratitude glow!

Effect of gratitude letter writing (left) and journaling three good things daily (right) on happiness over 6 months (Seligman et al., 2005).

It may not be shocking, but grateful people also take better care of themselves and enjoy mental and physical benefits on top of greater happiness. Other studies have found that practicing gratitude is associated with better sleep, decreased anxiety, and improved exercise habits.

However, with too little sleep, gratitude no longer seems to ease anxiety. So be grateful and get those nighttime Z’s!

Expressing gratitude can boost happiness and affect our physical well-being, but which brain regions respond to the moral sentiment of gratitude?

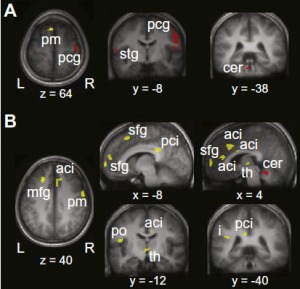

In 2009, NIH researchers conducted an fMRI study measuring changes in cerebral blood flow, which is used as a proxy for neural activity in the brain, while subjects were shown positive word pairings designed to trigger feelings of gratitude. The more gratitude a participant experienced, the more active their hypothalamus became. Because the hypothalamus is an important regulator of body temperature, sleep, metabolism, hunger, and the body’s stress response, this link may help explain why expressing gratitude is associated with improvements in sleep, depression, and anxiety.

Gratitude. It’s the reason for the season. But in 2014 let’s celebrate and express gratitude not only during the holidays but frequently throughout the year. I’d like to start this year by saying how thankful I am to you, The Synaptic Scoop readers. You make writing this blog a joy. Thank you and have a very Happy New Year filled with lots of gratitude!

Seligman M.E.P., Steen T.A., Park N. & Peterson C. (2005). Positive Psychology Progress: Empirical Validation of Interventions. American Psychologist, 60 (5) 410-421. DOI: 10.1037/0003-066X.60.5.410

Zahn R., Moll J., Paiva M., Garrido G., Krueger F., Huey E.D. & Grafman J. (2009). The Neural Basis of Human Social Values: Evidence from Functional MRI. Cerebral Cortex, 19 (2) 276-283. DOI: 10.1093/cercor/bhn080